Amid increasing concerns about AI-generated disinformation and Deepfakes, I’ve been receiving more questions on how individuals and companies can protect themselves.

The issue is urgent. The World Economic Forum’s Global Risks Report 2024 ranked misinformation and disinformation, amplified by AI, as the most severe global risk over the next two years, amid a surge in incidents.

Just this week, a multinational firm’s Hong Kong office was scammed out of about 25 million USD by a digitally recreated avatar of its CFO, who ordered the money transfers during a video call.

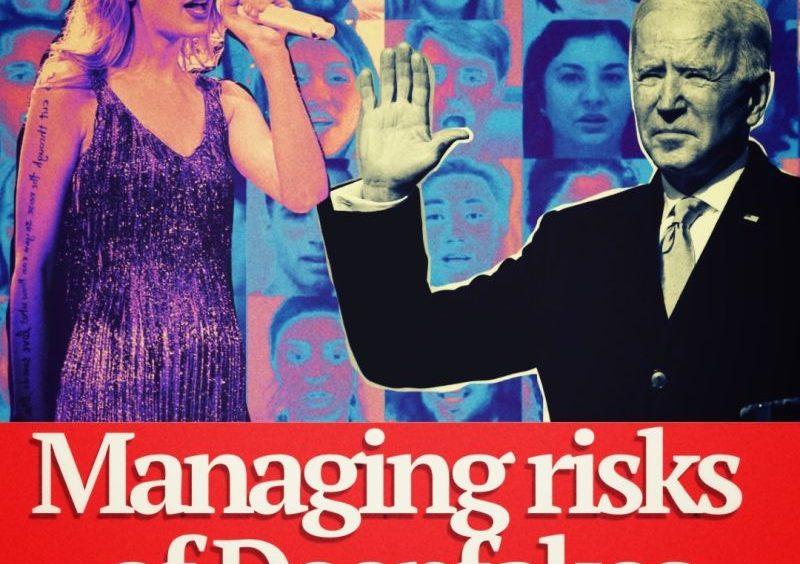

Previously, explicit Deepfake images of Taylor Swift flooded social media.

In 2024, the Year of Elections, with 68 countries heading to the polls, AI-generated disinformation could pose a major threat to democratic processes.

Yet, while celebrities help raise awareness, the majority of Deepfake victims are likely to be ordinary people and companies.

So, what strategies are available to protect people?

First, here’s what may not work:

Detection tools seem intuitive for spotting Deepfakes but have three fundamental flaws:

1. They are unreliable. In 2020, the winning entry in Facebook’s (now Meta’s) Deepfake Detection Challenge scored only about 65% on unseen test videos. Detection software and generative AI have both improved since.

2. It’s a cat-and-mouse race. Detection tools rely on the same technology used to create Deepfakes, so every improvement in detection can be used to train more convincing fakes.

3. Scale and timing. Even when accurate, detection tools are applied after the fact, so content may already have gone viral before it is flagged.

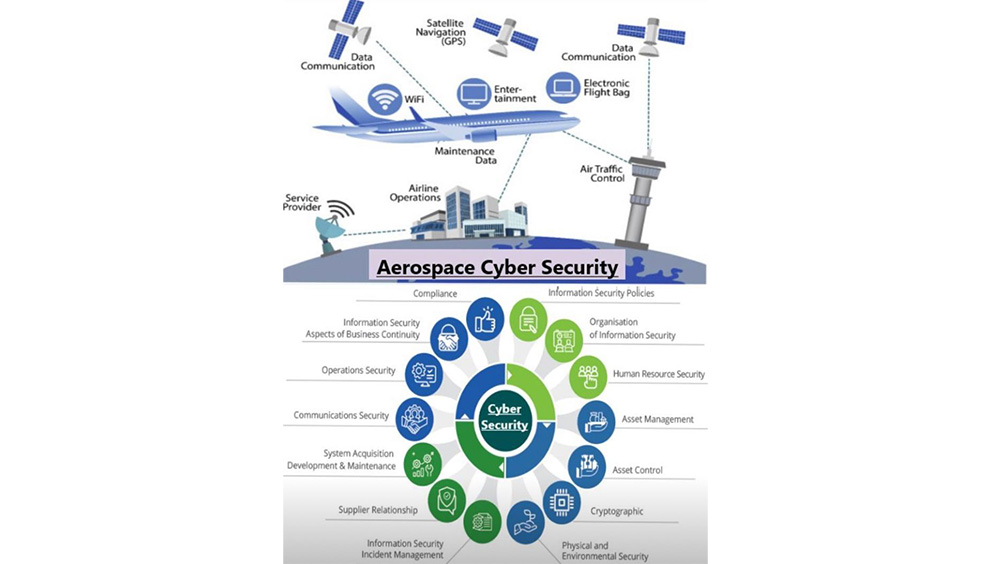

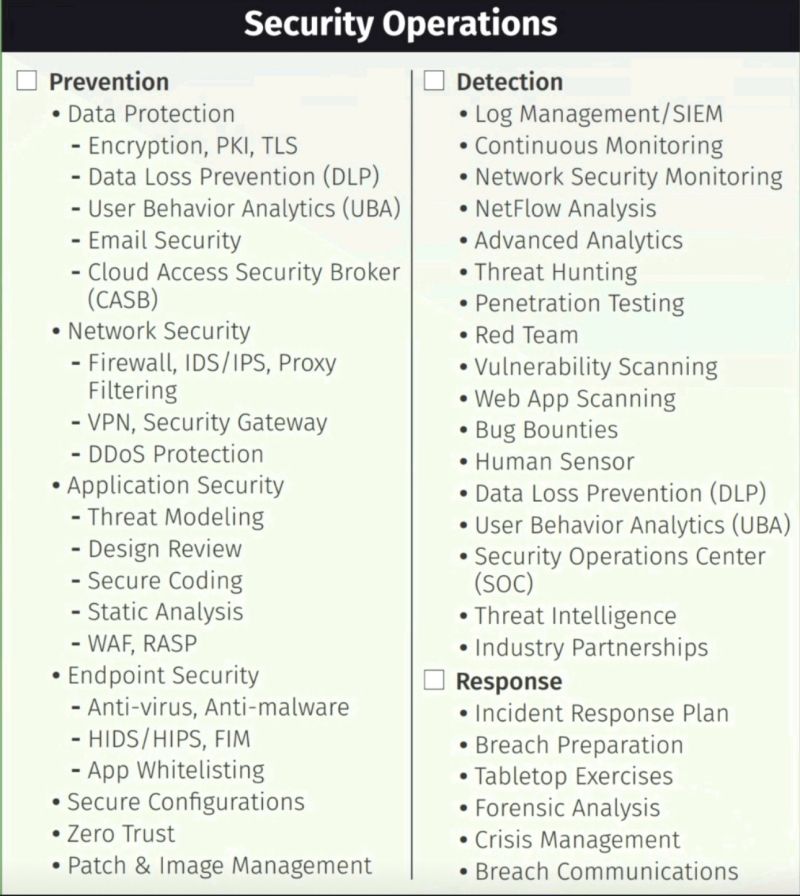

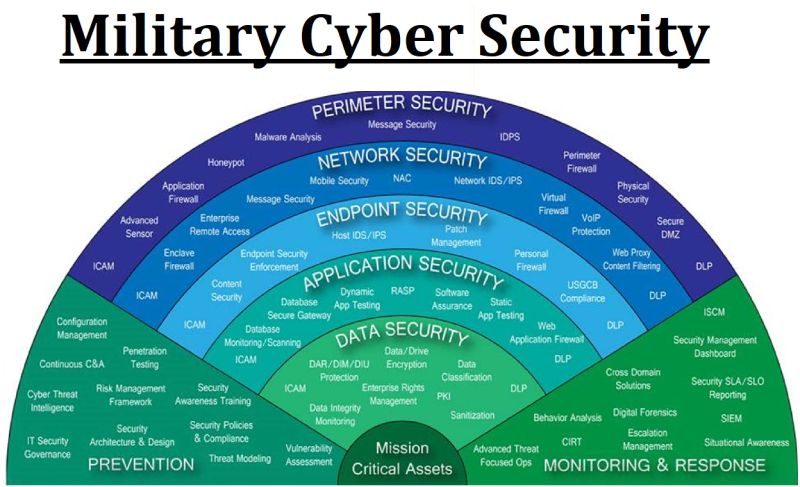

Technical solutions prioritising authentication at media creation seem more promising. There’s no silver bullet, but three approaches can help:

– Watermarking: Embeds invisible markers in digital media to authenticate ownership, flag AI-generated content and reveal unauthorised usage (e.g., Google DeepMind’s SynthID).

– Fingerprinting: Analyses digital content, without altering it, to derive a compact identifier that allows copies to be tracked and detected even after minor edits.

– Cryptographic hashes: Generates a fixed-length string (a digest) from the content; signing this digest at creation lets anyone later verify that the content has not been altered (e.g., Truepic).
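To make the difference between the last two approaches concrete, here is a minimal Python sketch, assuming a toy 8x8 grayscale image stored as plain lists so that no imaging library is needed. The cryptographic hash (SHA-256) changes completely when any pixel changes, so a signed digest exposes later edits. The fingerprint is an average hash, one simple form of perceptual hashing, which survives a small brightness change. The signing step uses HMAC only because it ships with Python; real provenance systems rely on public-key signatures, and the key and helper names here are hypothetical. Watermarking is not shown.

```python
import hashlib
import hmac

# A toy 8x8 grayscale "image" (pixel values 0-255) kept as plain lists so the
# sketch needs no imaging library; real systems would decode actual media files.
Image = list[list[int]]


def make_image() -> Image:
    """Build a simple gradient image (values stay between 0 and 168)."""
    return [[x * 16 + y * 8 for x in range(8)] for y in range(8)]


def brighten(img: Image, delta: int) -> Image:
    """Simulate a small, harmless edit such as a brightness tweak."""
    return [[min(255, p + delta) for p in row] for row in img]


def to_bytes(img: Image) -> bytes:
    return bytes(p for row in img for p in row)


# Cryptographic hash: changing any byte produces a completely different digest.
def sha256_digest(img: Image) -> str:
    return hashlib.sha256(to_bytes(img)).hexdigest()


# Signing the digest at capture time. HMAC with a shared secret is a stand-in
# chosen because it is in the standard library; provenance systems use
# public-key signatures instead. The key below is hypothetical.
CAPTURE_KEY = b"hypothetical-device-secret"


def sign(digest: str) -> str:
    return hmac.new(CAPTURE_KEY, digest.encode(), hashlib.sha256).hexdigest()


def verify(img: Image, signature: str) -> bool:
    """True only if the image is byte-for-byte what was signed."""
    return hmac.compare_digest(sign(sha256_digest(img)), signature)


# Fingerprint: an "average hash", one simple perceptual hash. Each bit records
# whether a pixel is brighter than the image's mean, so a uniform brightness
# shift that does not clip any pixel leaves the fingerprint unchanged.
def average_hash(img: Image) -> int:
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits; small distances suggest the same content."""
    return bin(a ^ b).count("1")


if __name__ == "__main__":
    original = make_image()
    edited = brighten(original, 5)

    signature = sign(sha256_digest(original))
    print(verify(original, signature))  # True
    print(verify(edited, signature))    # False: the bytes changed
    print(hamming(average_hash(original), average_hash(edited)))  # 0
```

The two checks answer different questions: the signed hash asks “is this exactly what was captured?”, while the fingerprint asks “is this a copy of something already seen?”, which is why they are often combined.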

Beyond technical solutions, policy initiatives such as the proposed NO FAKES Act in the US and the EU AI Act are emerging, alongside technical standards such as C2PA (Coalition for Content Provenance and Authenticity).

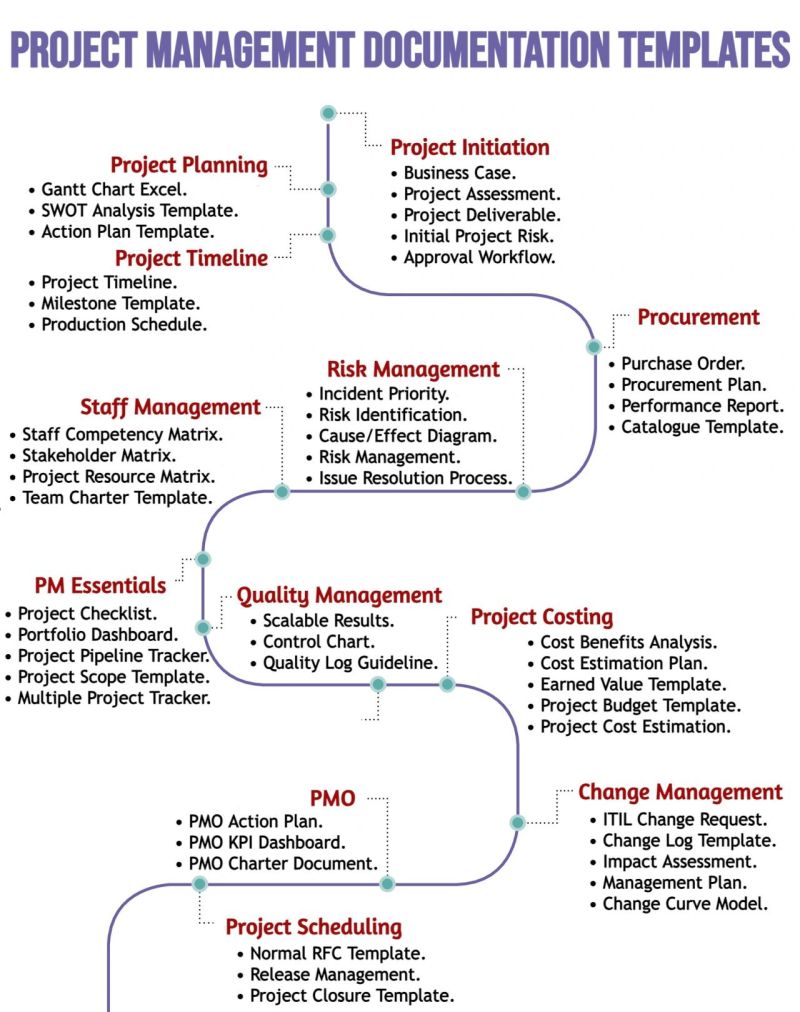

Yet, when working with organisations, we also pursue additional steps:

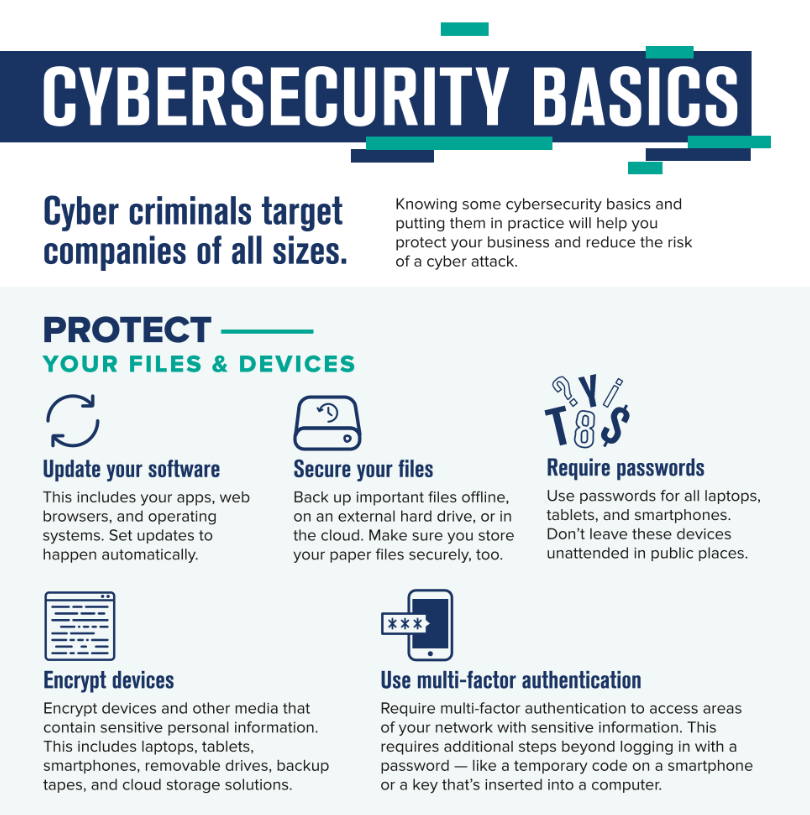

– Employee Training: Initiate training modules on Deepfake risks and escalation protocols.

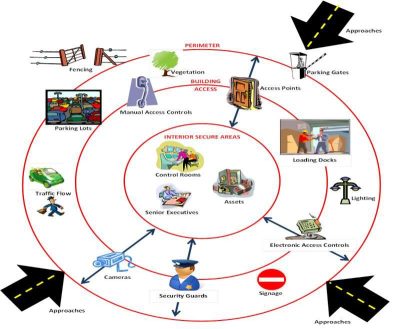

– Risk Exposure Assessment: Analyse your organisation’s vulnerability based on operations and business model.

– Enhanced Authentication Protocols: Implement robust multi-factor authentication and out-of-band verification (e.g., calling back a known number before acting on payment requests) to protect against Deepfake impersonations.

– Crisis Response Framework: Establish a crisis management strategy for Deepfake incidents, including rapid response to mitigate targeted disinformation.
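One protocol that targets impersonations like the CFO-avatar transfer is out-of-band verification: a high-value request is not acted on until it is confirmed through a separate, pre-registered channel, however convincing the caller looks or sounds. The Python sketch below encodes that rule under stated assumptions; the threshold, the contact directory and the `TransferRequest` and `may_release` names are hypothetical, and a real control would live inside a payment workflow rather than a single function.

```python
from dataclasses import dataclass

# Hypothetical policy value; each organisation would set its own.
HIGH_VALUE_THRESHOLD = 10_000.0

# Hypothetical directory of contact details registered in advance.
# Call-backs must use these, never contact details supplied in the request.
REGISTERED_CONTACTS = {
    "cfo": "+00 0000 0001",
    "ceo": "+00 0000 0002",
}


@dataclass
class TransferRequest:
    requester_role: str               # who the request claims to come from
    amount: float
    callback_confirmed: bool = False  # set only after calling the registered contact


def may_release(req: TransferRequest) -> bool:
    """Decide whether a transfer may proceed under this sketch's policy."""
    if req.amount < HIGH_VALUE_THRESHOLD:
        return True  # below the threshold, this sketch requires no call-back
    if req.requester_role not in REGISTERED_CONTACTS:
        return False  # no independent channel exists to confirm the identity
    # Seeing or hearing the requester on a call is not treated as proof of
    # identity; only confirmation over the pre-registered channel counts.
    return req.callback_confirmed


if __name__ == "__main__":
    request = TransferRequest("cfo", 25_000_000)
    print(may_release(request))  # False: held until confirmed
    request.callback_confirmed = True
    print(may_release(request))  # True
```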

There is no silver bullet for protecting organisations against these risks, but combining these strategies can reduce both the likelihood of an attack and the damage it causes.

_______

#deepfake #AI #speaker #generativeai #disinformation #syntheticreality